Beyond the CAP Theorem - consistency models

tldr; Consistency models describe the consistency guarantees between processes in a distributed system. They each have performance bounds. The CAP Theorem describes the most all-or-nothing of these where linearizable consistency comes at a cost to availability. Other common and useful consistency models to know are eventual and causal consistency.

The CAP Theorem describes a fundamental tradeoff all distributed systems must make when the system fails to communicate internally - the system can either respond to requests with a potentially inconsistent view of the data to maintain Availability, or sacrifice Availability to preserve Consistency.

This is a simple, high-level description of an inescapable tradeoff. However, the rigorous reader will be asking what Availability and Consistency actually mean. These terms conceal lower-level bits of exchange in the tradeoff space. These bits are formally specified with “consistency models”.

Consistency models describe the different consistency guarantees distributed systems can make, each of which has an upper bound on performance or “Availability”. Generally, “stronger” consistency means worse performance. The CAP Theorem uses the strongest consistency model as its definition for “Consistency” - linearizability1. It also takes the least granular notion of performance for its definition of “Availability” - the system is either fully available, or not. So, while useful, the CAP Theorem only describes the tradeoff at the coarsest possible level - either the system is as strongly consistent as possible, or as available as possible.

In distributed systems, data can be concurrently read and written in multiple processes. These operations happen in real-world time, but the system doesn’t know the real-world time at which they begin or end - computers only have access to a local clock, and the clocks on different computers are not synchronized. To define consistency is to define how the system handles ordering these operations given the lack of a shared clock. Consistency models define the ordering guarantees the system makes considering all the possible orders in which operations may appear to each process, and what coordination is required between processes to make those guarantees. Ordering guarantees can be stronger or weaker, and require blocking or nonblocking coordination (or none at all).

The linearizability consistency model describes a system that behaves as though it does know the real-world ordering of operations, as if the operations were occurring within a single global clock-space. Because there is in fact no global clock-space, behaving like there is one requires a synchronization protocol - the processes have to coordinate in a blocking way to preserve clock synchrony. When communication fails, this synchronization can’t occur.

Linearizability has the benefit of matching most people’s expectation of what it means to be “consistent” and being easy to reason about, but in some contexts it is stronger than required and the cost to performance is too high. This is where other consistency models are useful - some weaker models don’t require communication at all times, and therefore perform better during failures.

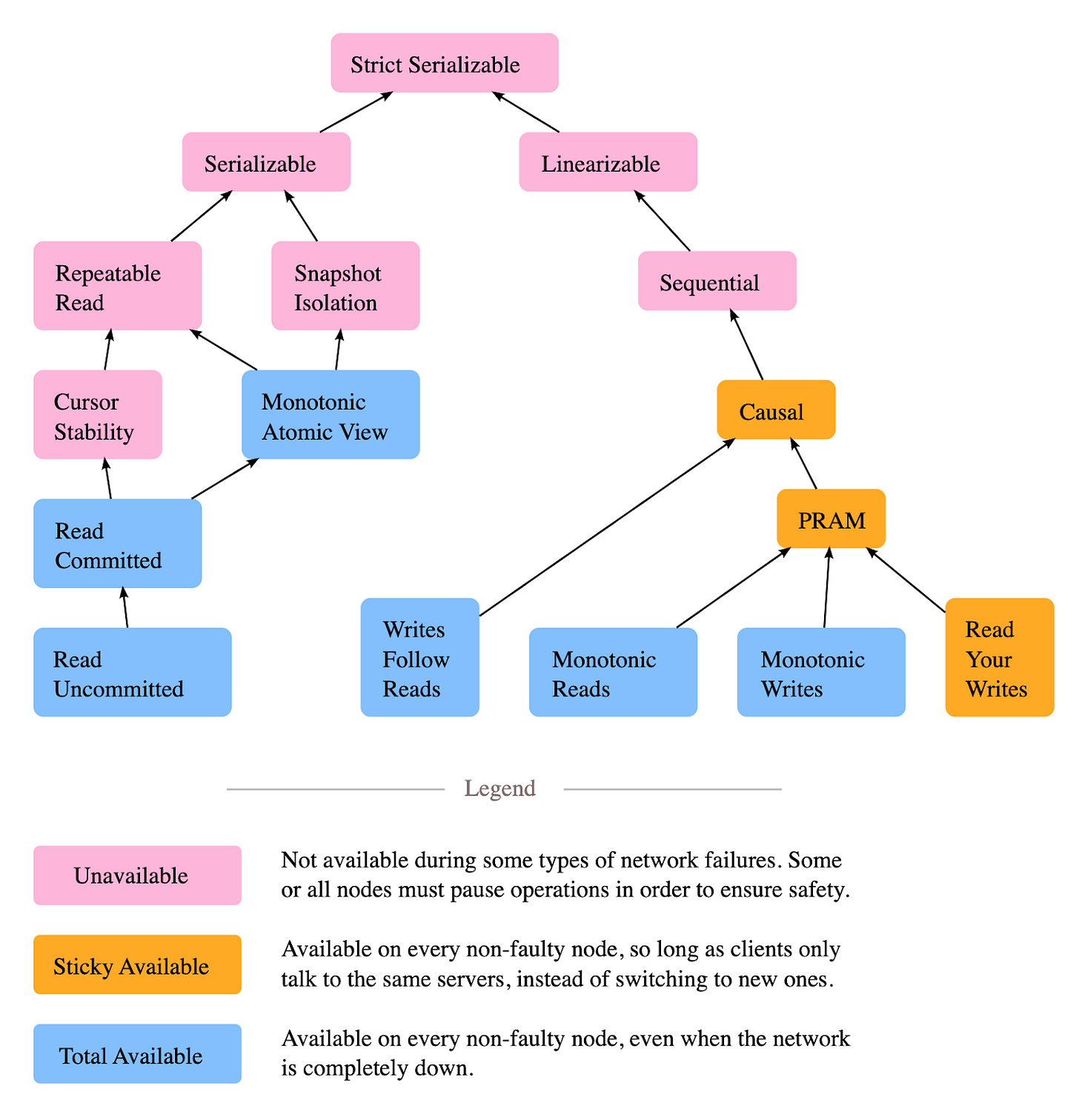

Some useful alternative consistency models are causal consistency and eventual consistency. The most useful resource I have found that explores the details and tradeoffs of consistency models is provided by Jepsen (they omit eventual consistency, which is not uncommon in the literature because it is quite weak). The following image does a great job of highlighting which models have which availability characteristics.

The left of the tree concerns distributed transactions, which are most relevant to database design. The right side will be more relevant to distributed application design. Jepsen frequently references Consistency in Non-Transactional Distributed Storage Systems by Viotti and Vukolić, which is a very thorough resource containing more consistency models and more details (including eventual consistency).

Another great read on this topic is A Critique of the CAP Theorem by Martin Kleppmann. In this paper, Kleppmann advocates the terminology “delay sensitivity” to describe the sensitivity of common consistency models to network delays, for both read and write operations. Something I find interesting is that the sequential consistency model, which provides very strong consistency guarantees, can be implemented to be insensitive to delays for either reads or writes, but not both. This highlights just how nuanced this tradeoff space really is.

As you can see, there is a lot to digest here. Here’s my quick and dirty take on some key, common consistency models and their tradeoffs:

Linearizability - strong, simple, but poor performance

The strongest consistency model, and a good choice when correctness is really important. It has the huge benefit of being easy to reason about, and matching expectations. It’s also fairly simple to implement. The downside is that no process can proceed when there’s an internal communication failure. Even in healthy conditions, all operations are exposed to added latency from communicating with other processes while they synchronize.

Eventual Consistency - good performance, simple, but poor correctness

I have often heard this referenced as the silver bullet solution to the CAP Theorem in industry, but it’s actually one of the weakest models. Eventual consistency only guarantees that data stores eventually converge to the same value. Sometimes this can be as simple as propagating operations asynchronously with at-least-once (if the operations are commutative and idempotent) or exactly-once (if the operations are commutative but not idempotent) delivery. However, since eventual consistency provides no ordering guarantees, operations applied out of order may result in temporary anomalous states - states that are inconsistent with the actual order that operations occurred. This can be a major downside, as you lose a lot of trust in your data if it enters states it never really should be in, even temporarily. So it’s not really a silver bullet. Clients can proceed during internal outages, and it’s simple, which are its major upsides.

Causal Consistency - good correctness, good performance, but complex

Causal consistency respects the causal dependencies between operations in its ordering. This means out-of-order operations are essentially not applied until their dependencies are received. Also sometimes called “strong eventual consistency”, data stores eventually converge in this model, and don’t ever enter intermediate anomalous states. Clients can proceed during internal outages, provided they communicate with the same process. The major downside to causal consistency is the complexity of implementation and the alignment required between processes (and therefore the people working on those processes, which can be separate teams working on separate services) to implement it correctly. Every operation must include some additional metadata about its dependencies, and every client must understand how to interpret that metadata when rendering the current value.

Sometimes other consistency models are used to define Consistency in discussions of the CAP Theorem, but the original model discussed by Gilbert and Lynch is linearizability (also called atomic consistency in this paper). Furthermore, while linearizability is commonly recognized as the strongest consistency model for read/write object, the Jepsen diagram includes Strict Serializable as an apparently stronger model for transactional (multiple operations on multiple read/write objects). As a simplification, this article doesn’t get into the differences between distributed read/write and transactional objects. Transactional objects are most relevant for database design, whereas application protocols typically work at the read/write object level.